How to design AI interfaces: tools, insights, and reflections

As AI systems become increasingly integrated into our lives, the question of how to design human-AI interactions is becoming both more important and more tangible. Until now, to develop any kind of product interface (whether physical or digital), most designers would generally follow this standard process: identifying user needs, defining product functionalities and workflows, and creating interfaces that align with the brand’s vision. However, AI interfaces fundamentally disrupt this design process.

Unlike traditional systems, AI introduces dynamic, adaptive interactions that evolve over time and generate a virtually infinite variety of outcomes, many of which are unpredictable even to the designers themselves. Think of a chatbot, for example: how can designers consider all the possible interactions and workflows that users might encounter? How will they know all the possible errors or misunderstandings that could occur, and how to recover from them? Or think of generative AI tools: how do designers ensure users quickly understand what the system can do, what its limitations are, and how to use it effectively?

These examples point to three key challenges for designers working with AI:

- Overwhelming Functionalities: With AI capable of performing an extraordinary number of tasks, it becomes challenging for designers to understand what the AI can and cannot do, and to communicate this effectively to their users. This often results in either a steeper learning curve for users or a poor understanding of the system’s capabilities and limitations.

- Unpredictable Interactions: AI systems handle countless input-output combinations, making it impossible to test or control every possible scenario.

- Opaque Mechanisms: Many designers struggle to understand how user inputs translate to system outputs due to the complexity of AI models. This lack of clarity can hinder their ability to create meaningful, intuitive, and reliable interfaces.

Useful tools

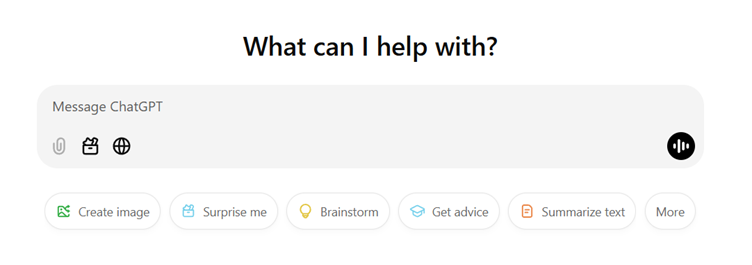

To deal with these challenges, designers need new tools and approaches. One resource that helps address these issues is the Microsoft HAX Toolkit, an open-source tool designed for creating human-AI experiences.

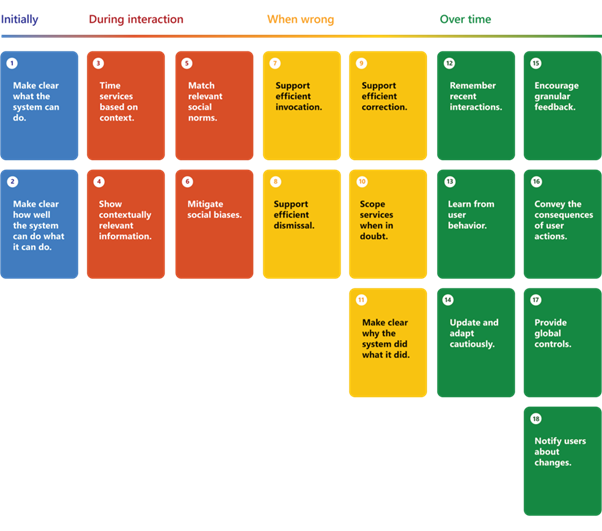

The toolkit offers 18 design guidelines, each presented as a card that aligns with a specific stage of the user journey. Each card gives a short explanation, which can be expanded for more detail. The guidelines are paired with practical design patterns and examples from real AI products, making it easier to see how they can be applied.

The HAX Toolkit is especially helpful for UX and UI designers working to understand and address key interaction points in AI systems. It provides clear and actionable guidance to ensure that critical touchpoints are deliberately designed and user-friendly. However, it focuses primarily on refining the end-product experience, leaving designers to tackle higher-level decisions such as defining which kinds of human-AI interactions to allow and design for.

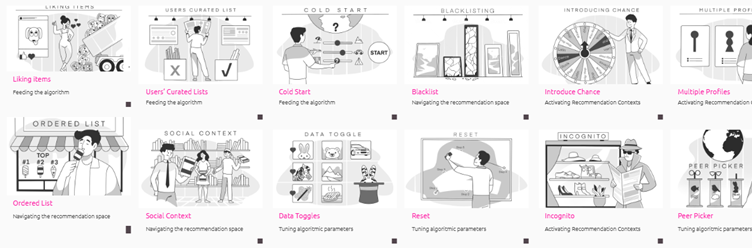

If you’re looking to tackle those high-level design challenges, the Algorithmic Affordances pattern library offers a valuable framework, particularly for designing recommender systems. Developed by the Human Experience & Media Design group at the University of Applied Sciences Utrecht, this tool goes beyond just interface design. It helps define meaningful user interactions with algorithms while considering both user needs and ethical aspects.

Building on this tool, a new European-funded project launched in October 2024, bringing together over 30 researchers and practitioners from academia, research institutes, and the design profession. The goal is to create an expanded, comprehensive algorithmic affordances pattern library that combines academic rigor with industry standards and is tested on a large scale. What’s even more exciting is that Rhite is also involved in this important project! We are really proud to contribute to shaping such a relevant and interesting tool, one that will have a lasting impact on AI design.

Looking ahead

To prepare for the future, it’s important for designers to reflect not only on the tools we use but also on the deeper principles guiding AI design. Understanding key topics at the crossroads of philosophy and practical application will help shape more thoughtful and responsible AI. Some areas worth exploring include:

- Human Agency

- Explainability and Transparency

- User Perception

Here is a short reflection on each of these topics, intended to encourage critical thinking and spark ideas on AI-human interactions.

Human Agency

Human agency is a crucial aspect of how users engage with technology. AI systems, particularly in content recommendation, often personalize content based on either passive data collection (where the system quietly collects data based on the user’s behaviour and profile) or active data collection (where users provide feedback on the content they see or would like to see). While passive systems offer little to no control, active systems seem to give users more agency. In fact, the intense personalization typical of these AI systems can create the impression that users have an exceptional degree of control over the technology. This feeling of empowerment, however, may be misleading: even in active data collection systems, how much control do users really have when they offer feedback?

For example, when giving a “like” to content, users do not truly understand how their action will affect future recommendations. Should users then be able to specify how strongly they like something (e.g., on a scale from 1 to 10)? Could they provide more details about which aspects they liked specifically (e.g., the topic, the creator, the approach…)? What if users had the option to temporarily opt out of certain recommendations, especially if they gave a “like” just to support a specific piece of content but don’t want more similar content in the future? While options like these seem to give users more agency, they could also overwhelm them.

The idea of giving users more control sounds ideal, but if we were to ask users to be this detailed and specific, would it improve their experience, or simply create a more time-consuming, complicated interaction?
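To make this trade-off more tangible, here is a minimal Python sketch of what a richer “like” signal could look like if it carried a strength, the aspects the user liked, and a temporary opt-out. It is purely illustrative: the class `LikeFeedback`, its fields, and its method are hypothetical names and do not describe how any real recommender system works.

```python
from dataclasses import dataclass, field
from datetime import date
from typing import Optional


@dataclass
class LikeFeedback:
    """Hypothetical richer 'like' signal; every name here is an assumption."""

    item_id: str
    strength: int = 10  # 1 (mild interest) to 10 (strong interest)
    aspects: set[str] = field(default_factory=set)  # e.g. {"topic", "creator"}
    mute_similar_until: Optional[date] = None  # temporary opt-out, if any

    def __post_init__(self) -> None:
        # Reject values outside the 1-10 scale.
        if not 1 <= self.strength <= 10:
            raise ValueError("strength must be between 1 and 10")

    def counts_towards_recommendations(self, today: date) -> bool:
        # The like influences recommendations only once any opt-out has ended.
        return self.mute_similar_until is None or today >= self.mute_similar_until


# A supportive like that should not trigger similar content for a while.
feedback = LikeFeedback(
    item_id="video-1",
    strength=7,
    aspects={"creator"},
    mute_similar_until=date(2025, 1, 10),
)
print(feedback.counts_towards_recommendations(date(2025, 1, 5)))
```

Even this small sketch turns a single tap into three separate decisions for the user, which is exactly the tension between agency and overload.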

Explainability and Transparency

AI systems are often described as “black boxes” because their decision-making processes are hidden or too complex to explain. This opacity creates mistrust and confusion, especially when systems behave unpredictably. Additionally, consider the iconography often associated with AI: sparkles and magic wands, suggesting a mysterious, almost supernatural power. While this imagery is compelling, it is misleading: it feeds the dangerous narrative that AI works by magic rather than by design.

In reality, AI systems are created by developers with specific objectives in mind, using carefully calculated mechanisms to achieve those goals. The fact that those goals and decisions are not explained to end users does not mean that they were not deliberately designed. Every decision made during the development of an AI system, from sourcing training data to building the algorithm, directly impacts how the system operates. Users need this information to make informed choices about their interactions with the technology, and designers are responsible for presenting it in a way that is accessible and understandable.

But here’s where the balance gets tricky: while transparency and explainability are essential to build trust, it’s unrealistic to expect users to understand every detail behind every interaction with an AI. Overloading them with technical explanations could make systems more intimidating and less accessible, especially for those with lower digital literacy.

So, once again, where do we draw the line? More transparency might empower users, but it could also make systems more overwhelming or less efficient. Are more transparent systems inherently less user-friendly? Or can we design AI that is both explainable and intuitive?

User Perception

AI stands apart from other technologies in how people perceive it. When interacting with physical products, users generally understand their limitations. A toaster burns bread? Likely a design flaw or a malfunction. But with AI, expectations are less clear. Many people view AI as either infallible or inherently flawed, often depending on their prior exposure to it.

This dichotomy creates problems. When AI performs well, users might overestimate its capabilities, believing it can solve any problem. Conversely, when AI makes mistakes, especially on tasks humans find trivial, users may dismiss it as unreliable.

Human-like interfaces complicate matters further. Chatbots and virtual assistants often mimic human traits, encouraging users to attribute human-like intentions to them. This can lead to over-reliance on AI, with users assuming it understands them better than it does. Such misplaced trust opens the door to manipulation, on both an individual and a societal level.

Goodbye, human interactions?

Let’s finish on a bit of a downer, shall we? We talked about how we could shape AI interactions, but what about how AI interactions are reshaping us? Many AI systems, particularly conversational bots, are designed to be unfailingly polite and accommodating. They respond to rude language with patience and to disturbing requests with neutrality. While this consistency enhances the user experience, it may also set unrealistic expectations for human interactions.

If people become accustomed to the unwavering politeness of AI, they might begin to expect the same behaviour from other people, which could lead to disappointment or frustration. This shift could also contribute to a decline in social and emotional skills, making it more difficult for individuals to navigate conflicts and understand the nuances of human interactions. Eventually, as the use of AI becomes more and more frequent in both personal and professional contexts, will individuals prefer to interact with AI over humans?